EMNLP 2022 | Main-Conference Long Papers, Organized by Topic

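© Author | Chen Yang  Affiliation | Gaoling School of Artificial Intelligence, Renmin University of China  Research area | Recommender systems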

Overview

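EMNLP 2022 (The 2022 Conference on Empirical Methods in Natural Language Processing) is a top international conference in natural language processing. It is organized annually by SIGDAT, a special interest group of the Association for Computational Linguistics (ACL), and is scheduled for December 7 to 11, 2022, in Abu Dhabi, UAE, in a hybrid online and in-person format. EMNLP is a CCF class-B recommended international conference and has a strong academic reputation in the NLP community. This year the main conference accepted 714 long papers (162 of them oral) and 113 short papers (11 oral); the companion Findings track accepted 453 long papers and 94 short papers; a further 8 papers will be accepted pending ethics review. For reasons of space, we have categorized only the 714 main-conference long papers; the remaining papers can be found at the following link: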

https://2022.emnlp.org

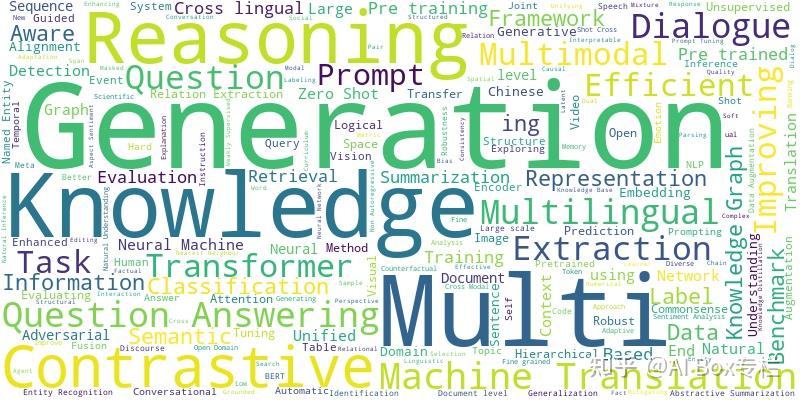

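Research hotspots at this year's EMNLP: the word cloud above was generated from the titles of the long papers, and it shows that this year's work concentrates on directions such as Generation, Knowledge, and Reasoning.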

Contents

- Commonsense Reasoning

- Computational Social Science and Cultural Analytics

- Dialogue and Interactive Systems

- Discourse and Pragmatics

- Efficient Methods for NLP

- Ethics

- Information Extraction

- Information Retrieval and Text Mining

- Interpretability, Interactivity and Analysis of Models for NLP

- Language Modeling and Analysis of Language Models

- Linguistic Theories, Cognitive Modeling and Psycholinguistics

- Machine Learning for NLP

- Machine Translation

- Multilinguality

- Natural Language Generation

- NLP Applications

- Phonology, Morphology and Word Segmentation

- Question Answering

- Resources and Evaluation

- Semantics: Lexical, Sentence level, Textual Inference and Other areas

- Sentiment Analysis, Stylistic Analysis, and Argument Mining

- Speech, Vision, Robotics, Multimodal Grounding

- Summarization

- Syntax, Parsing and their Applications

- Theme Track

- Unsupervised and Weakly-Supervised Methods in NLP

1. Commonsense Reasoning

- Identifying Physical Object Use in Sentences

- A Systematic Investigation of Commonsense Knowledge in Large Language Models

- Metric-guided Distillation: Distilling Knowledge from the Metric to Ranker and Retriever for Generative Commonsense Reasoning

- Using Commonsense Knowledge to Answer Why-Questions

- Maieutic Prompting: Logically Consistent Reasoning with Recursive Explanations

- Language Models of Code are Few-Shot Commonsense Learners

- Enhancing Self-Consistency and Performance of Pre-Trained Language Models through Natural Language Inference

- EvEntS ReaLM: Event Reasoning of Entity States via Language Models

- GeoMLAMA: Geo-Diverse Commonsense Probing on Multilingual Pre-Trained Language Models

- Memory-assisted prompt editing to improve GPT-3 after deployment

- Retrieval Augmentation for Commonsense Reasoning: A Unified Approach

- ReCo: Reliable Causal Chain Reasoning via Structural Causal Recurrent Neural Networks

- Graph Hawkes Transformer for Extrapolated Reasoning on Temporal Knowledge Graphs

- A Sequential Flow Control Framework for Multi-hop Knowledge Base Question Answering

- ACENet: Attention Guided Commonsense Reasoning on Hybrid Knowledge Graph

- TranSHER: Translating Knowledge Graph Embedding with Hyper-Ellipsoidal Restriction

- Rainier: Reinforced Knowledge Introspector for Commonsense Question Answering

- Understanding ME? Multimodal Evaluation for Fine-grained Visual Commonsense

2. Computational Social Science and Cultural Analytics

- Offer a Different Perspective: Modeling the Belief Alignment of Arguments in Multi-party Debates

- Prompting for Multimodal Hateful Meme Classification

- Affective Idiosyncratic Responses to Music

- Modeling Information Change in Science Communication with Semantically Matched Paraphrases

- Discovering Differences in the Representation of People using Contextualized Semantic Axes

- How to disagree well: Investigating the dispute tactics used on Wikipedia

- Borrowing Human Senses: Comment-Aware Self-Training for Social Media Multimodal Classification

- Unifying Data Perspectivism and Personalization: An Application to Social Norms

- Empowering the Fact-checkers! Automatic Identification of Claim Spans on Twitter

- Sequence Models for Document Structure Identification in an Undeciphered Script

- Distilling Context for Toxicity Detection via Information Bottleneck

- Sentence-level Media Bias Analysis Informed by Discourse Structures

3. Dialogue and Interactive Systems

- Navigating Connected Memories with a Task-oriented Dialog System

- FETA: A Benchmark for Few-Sample Task Transfer in Open-Domain Dialogue

- IM^2: an Interpretable and Multi-category Integrated Metric Framework for Automatic Dialogue Evaluation

- Prompt Conditioned VAE: Enhancing Generative Replay for Lifelong Learning in Task-Oriented Dialogue

- End-to-End Neural Discourse Deixis Resolution in Dialogue

- CDialog: A Multi-turn Covid-19 Conversation Dataset for Entity-Aware Dialog Generation

- When More Data Hurts: A Troubling Quirk in Developing Broad-Coverage Natural Language Understanding Systems

- Injecting Domain Knowledge in Language Models for Task-oriented Dialogue Systems

- DialogConv: A Lightweight Fully Convolutional Network for Multi-view Response Selection

- CDConv: A Benchmark for Contradiction Detection in Chinese Conversations

- Co-guiding Net: Achieving Mutual Guidances between Multiple Intent Detection and Slot Filling via Heterogeneous Semantics-Label Graphs

- Estimating Soft Labels for Out-of-Domain Intent Detection

- InstructDial: Improving Zero and Few-shot Generalization in Dialogue through Instruction Tuning

- Aligning Recommendation and Conversation via Dual Imitation

- Correctable-DST: Mitigating Historical Context Mismatch between Training and Inference for Improved Dialogue State Tracking

- MetaASSIST: Robust Dialogue State Tracking with Meta Learning

- Watch the Neighbors: A Unified K-Nearest Neighbor Contrastive Learning Framework for OOD Intent Discovery

- Counterfactual Data Augmentation via Perspective Transition for Open-Domain Dialogues

- There Is No Standard Answer: Knowledge-Grounded Dialogue Generation with Adversarial Activated Multi-Reference Learning

- Back to the Future: Bidirectional Information Decoupling Network for Multi-turn Dialogue Modeling

- Improving Multi-turn Emotional Support Dialogue Generation with Lookahead Strategy Planning

- Robots-Dont-Cry: Understanding Falsely Anthropomorphic Utterances in Dialog Systems

- FineD-Eval: Fine-grained Automatic Dialogue-Level Evaluation

- Group is better than individual: Exploiting Label Topologies and Label Relations for Joint Multiple Intent Detection and Slot Filling

- ProsocialDialog: A Prosocial Backbone for Conversational Agents

- CGoDial: A Large-Scale Benchmark for Chinese Goal-oriented Dialog Evaluation

- Information-Theoretic Text Hallucination Reduction for Video-grounded Dialogue

- STRUDEL: Structured Dialogue Summarization for Dialogue Comprehension

- BotsTalk: Machine-sourced Framework for Automatic Curation of Large-scale Multi-skill Dialogue Datasets

- Supervised Prototypical Contrastive Learning for Emotion Recognition in Conversation

- META-GUI: Towards Multi-modal Conversational Agents on Mobile GUI

- Q-TOD: A Query-driven Task-oriented Dialogue System

- dial2vec: Self-Guided Contrastive Learning of Unsupervised Dialogue Embeddings

- Multi-Label Intent Detection via Contrastive Task Specialization of Sentence Encoders

- Enhancing Joint Multiple Intent Detection and Slot Filling with Global Intent-Slot Co-occurrence

- IRRGN: An Implicit Relational Reasoning Graph Network for Multi-turn Response Selection

- Dungeons and Dragons as a Dialog Challenge for Artificial Intelligence

- CONQRR: Conversational Query Rewriting for Retrieval with Reinforcement Learning

- Towards Efficient Dialogue Pre-training with Transferable and Interpretable Latent Structure

- Reflect, Not Reflex: Inference-Based Common Ground Improves Dialogue Response Quality

- FlowEval: A Consensus-Based Dialogue Evaluation Framework Using Segment Act Flows

- Eliciting Knowledge from Large Pre-Trained Models for Unsupervised Knowledge-Grounded Conversation

- Structural Constraints and Natural Language Inference for End-to-End Flowchart Grounded Dialog Response Generation

4. Discourse and Pragmatics

- Discourse Comprehension: A Question Answering Framework to Represent Sentence Connections

- Sentence-Incremental Neural Coreference Resolution

- Concadia: Towards Image-Based Text Generation with a Purpose

- An Unsupervised, Geometric and Syntax-aware Quantification of Polysemy

- Facilitating Contrastive Learning of Discourse Relational Senses by Exploiting the Hierarchy of Sense Relations

5. Efficient Methods for NLP

- Training Dynamics for Curriculum Learning: A Study on Monolingual and Cross-lingual NLU

- Model Cascading: Towards Jointly Improving Efficiency and Accuracy of NLP Systems

- LightEA: A Scalable, Robust, and Interpretable Entity Alignment Framework via Three-view Label Propagation

- VIRT: Improving Representation-based Text Matching via Virtual Interaction

- Learning Label Modular Prompts for Text Classification in the Wild

- COST-EFF: Collaborative Optimization of Spatial and Temporal Efficiency with Slenderized Multi-exit Language Models

- Neural-based Mixture Probabilistic Query Embedding for Answering FOL queries on Knowledge Graphs

- Sparse Teachers Can Be Dense with Knowledge

- The Optimal BERT Surgeon: Scalable and Accurate Second-Order Pruning for Large Language Models

- An Efficient Memory-Augmented Transformer for Knowledge-Intensive NLP Tasks

- LittleBird: Efficient Faster & Longer Transformer for Question Answering

- Understanding and Improving Knowledge Distillation for Quantization Aware Training of Large Transformer Encoders

- Vector-Quantized Input-Contextualized Soft Prompts for Natural Language Understanding

- Tutoring Helps Students Learn Better: Improving Knowledge Distillation for BERT with Tutor Network

- Efficient Pre-training of Masked Language Model via Concept-based Curriculum Masking

- HashFormers: Towards Vocabulary-independent Pre-trained Transformers

- Calibrating Student Models for Emotion-related Tasks

- Overcoming Catastrophic Forgetting in Zero-Shot Cross-Lingual Generation

- Leveraging QA Datasets to Improve Generative Data Augmentation

- EdgeFormer: A Parameter-Efficient Transformer for On-Device Seq2seq Generation

6. Ethics

- "I'm sorry to hear that": Finding New Biases in Language Models with a Holistic Descriptor Dataset

- Exploration of the Usage of Color Terms by Color-blind Participants in Online Discussion Platforms

- ArtELingo: A Million Emotion Annotations of WikiArt with Emphasis on Diversity over Language and Culture

- PAIR: Prompt-Aware margIn Ranking for Counselor Reflection Scoring in Motivational Interviewing

- NewsClaims: A New Benchmark for Claim Detection from News with Attribute Knowledge

- POQue: Asking Participant-specific Outcome Questions for a Deeper Understanding of Complex Events

- MEE: A Novel Multilingual Event Extraction Dataset

- Late Fusion with Triplet Margin Objective for Multimodal Ideology Prediction and Analysis

- Do Vision-and-Language Transformers Learn Grounded Predicate-Noun Dependencies?

- MuRAG: Multimodal Retrieval-Augmented Generator for Open Question Answering over Images and Text

- Differentiable Data Augmentation for Contrastive Sentence Representation Learning

- Balancing out Bias: Achieving Fairness Through Balanced Training

- COLD: A Benchmark for Chinese Offensive Language Detection

- Gendered Mental Health Stigma in Masked Language Models

- SafeText: A Benchmark for Exploring Physical Safety in Language Models

- BERTScore is Unfair: On Social Bias in Language Model-Based Metrics for Text Generation

- Just Fine-tune Twice: Selective Differential Privacy for Large Language Models

- TextFusion: Privacy-Preserving Pre-trained Model Inference via Token Fusion

- Perturbation Augmentation for Fairer NLP

- Debiasing Pretrained Text Encoders by Paying Attention to Paying Attention

- MABEL: Attenuating Gender Bias using Textual Entailment Data

7. Information Extraction

- Transfer Learning from Semantic Role Labeling to Event Argument Extraction with Template-based Slot Querying

- Generative Knowledge Graph Construction: A Review

- Graph-based Model Generation for Few-Shot Relation Extraction

- A Good Neighbor, A Found Treasure: Mining Treasured Neighbors for Knowledge Graph Entity Typing

- ReSel: N-ary Relation Extraction from Scientific Text and Tables by Learning to Retrieve and Select

- MAVEN-ERE: A Unified Large-scale Dataset for Event Coreference, Temporal, Causal, and Subevent Relation Extraction

- Entity Extraction in Low Resource Domains with Selective Pre-training of Large Language Models

- Multilingual Relation Classification via Efficient and Effective Prompting

- Fine-grained Contrastive Learning for Relation Extraction

- SQUIRE: A Sequence-to-sequence Framework for Multi-hop Knowledge Graph Reasoning

- Style Transfer as Data Augmentation: A Case Study on Named Entity Recognition

- SetGNER: General Named Entity Recognition as Entity Set Generation

- SpanProto: A Two-stage Span-based Prototypical Network for Few-shot Named Entity Recognition

- Logical Neural Networks for Knowledge Base Completion with Embeddings & Rules

- Syntactic Multi-view Learning for Open Information Extraction

- A Unified Positive-Unlabeled Learning Framework for Document-Level Relation Extraction with Different Levels of Labeling

- RelU-Net: Syntax-aware Graph U-Net for Relational Triple Extraction

- Retrieval-Augmented Generative Question Answering for Event Argument Extraction

- Wider & Closer: Mixture of Short-channel Distillers for Zero-shot Cross-lingual Named Entity Recognition

- Syntactically Rich Discriminative Training: An Effective Method for Open Information Extraction

- Simple Questions Generate Named Entity Recognition Datasets

- Bi-Directional Iterative Prompt-Tuning for Event Argument Extraction

- Learning Robust Representations for Continual Relation Extraction via Adversarial Class Augmentation

- Attention and Edge-Label Guided Graph Convolutional Networks for Named Entity Recognition

- Improving Event Coreference Resolution Using Document-level and Topic-level Information

- Modeling Label Correlations for Ultra-Fine Entity Typing with Neural Pairwise Conditional Random Field

- Open Relation and Event Type Discovery with Type Abstraction

- Cross-stitching Text and Knowledge Graph Encoders for Distantly Supervised Relation Extraction

- Better Few-Shot Relation Extraction with Label Prompt Dropout

- UniRel: Unified Representation and Interaction for Joint Relational Triple Extraction

- MetaTKG: Learning Evolutionary Meta-Knowledge for Temporal Knowledge Graph Reasoning

- WR-One2Set: Towards Well-Calibrated Keyphrase Generation

- Query-based Instance Discrimination Network for Relational Triple Extraction

- MatchPrompt: Prompt-based Open Relation Extraction with Semantic Consistency Guided Clustering

- Towards Better Document-level Relation Extraction via Iterative Inference

- IELM: An Open Information Extraction Benchmark for Pre-Trained Language Models

- EDIN: An End-to-end Benchmark and Pipeline for Unknown Entity Discovery and Indexing

- Learning Cross-Task Dependencies for Joint Extraction of Entities, Events, Event Arguments, and Relations

- Entity-centered Cross-document Relation Extraction

- Boosting Document-Level Relation Extraction by Mining and Injecting Logical Rules

- "Covid vaccine is against Covid but Oxford vaccine is made at Oxford!" Semantic Interpretation of Proper Noun Compounds

- Towards relation extraction from speech

8. Information Retrieval and Text Mining

- Topic Modeling With Topological Data Analysis

- WeDef: Weakly Supervised Backdoor Defense for Text Classification

- Pseudo-Relevance for Enhancing Document Representation

- Certified Error Control of Candidate Set Pruning for Two-Stage Relevance Ranking

- RetroMAE: Pre-Training Retrieval-oriented Language Models Via Masked Auto-Encoder

- Efficient Document Retrieval by End-to-End Refining and Quantizing BERT Embedding with Contrastive Product Quantization

- Prompt-Based Meta-Learning For Few-shot Text Classification

- Generative Multi-hop Retrieval

- COCO-DR: Combating the Distribution Shift in Zero-Shot Dense Retrieval with Contrastive and Distributionally Robust Learning

- Mitigating Data Sparsity for Short Text Topic Modeling by Topic-Semantic Contrastive Learning

- CodeRetriever: A Large Scale Contrastive Pre-Training Method for Code Search

- ConvTrans: Transforming Web Search Sessions for Conversational Dense Retrieval

- Improving Multi-task Stance Detection with Multi-task Interaction Network

- Generative Entity Typing with Curriculum Learning

- Towards Reinterpreting Neural Topic Models via Composite Activations

- Explicit Query Rewriting for Conversational Dense Retrieval

- Exploring Representation-level Augmentation for Code Search

- DuReader-Retrieval: A Large-scale Chinese Benchmark for Passage Retrieval from Web Search Engine

- OTSeq2Set: An Optimal Transport Enhanced Sequence-to-Set Model for Extreme Multi-label Text Classification

- A Framework for Adapting Pre-Trained Language Models to Knowledge Graph Completion

- Reduce Catastrophic Forgetting of Dense Retrieval Training with Teleportation Negatives

- An Adaptive Logical Rule Embedding Model for Inductive Reasoning over Temporal Knowledge Graphs

- A Unified Neural Network Model for Readability Assessment with Feature Projection and Length-Balanced Loss

- Incorporating Relevance Feedback for Information-Seeking Retrieval using Few-Shot Document Re-Ranking

- Coordinated Topic Modeling

- Large Dual Encoders Are Generalizable Retrievers

- CODER: An efficient framework for improving retrieval through COntextual Document Embedding Reranking

- Recovering Gold from Black Sand: Multilingual Dense Passage Retrieval with Hard and False Negative Samples

9. Interpretability, Interactivity and Analysis of Models for NLP

- Revisiting Parameter-Efficient Tuning: Are We Really There Yet?

- Can Transformers Reason in Fragments of Natural Language?

- Why Should Adversarial Perturbations be Imperceptible? Rethink the Research Paradigm in Adversarial NLP

- Predicting Fine-Tuning Performance with Probing

- TASA: Deceiving Question Answering Models by Twin Answer Sentences Attack

- Transformer Feed-Forward Layers Build Predictions by Promoting Concepts in the Vocabulary Space

- Interpreting Language Models with Contrastive Explanations

- Balanced Adversarial Training: Balancing Tradeoffs between Fickleness and Obstinacy in NLP Models

- DropMix: A Textual Data Augmentation Combining Dropout with Mixup

- "Will You Find These Shortcuts?" A Protocol for Evaluating the Faithfulness of Input Salience Methods for Text Classification

- On the Transformation of Latent Space in Fine-Tuned NLP Models

- A Multilingual Perspective Towards the Evaluation of Attribution Methods in Natural Language Inference

- Robustness of Demonstration-based Learning Under Limited Data Scenario

- Entailer: Answering Questions with Faithful and Truthful Chains of Reasoning

- That's the Wrong Lung! Evaluating and Improving the Interpretability of Unsupervised Multimodal Encoders for Medical Data

- Logical Reasoning with Span-Level Predictions for Interpretable and Robust NLI Models

- Finding Dataset Shortcuts with Grammar Induction

- SLING: Sino Linguistic Evaluation of Large Language Models

- Towards Interactivity and Interpretability: A Rationale-based Legal Judgment Prediction Framework

- Adversarial Concept Erasure in Kernel Space

- ADDMU: Detection of Far-Boundary Adversarial Examples with Data and Model Uncertainty Estimation

- Let the CAT out of the bag: Contrastive Attributed explanations for Text

- Does Self-Rationalization Improve Robustness to Spurious Correlations?

- Cross-Linguistic Syntactic Difference in Multilingual BERT: How Good is It and How Does It Affect Transfer?

- Efficient Adversarial Training with Robust Early-Bird Tickets

- Learning to Explain Selectively: A Case Study on Question Answering

- Better Hit the Nail on the Head than Beat around the Bush: Removing Protected Attributes with a Single Projection

- Does Your Model Classify Entities Reasonably? Diagnosing and Mitigating Spurious Correlations in Entity Typing

- Measuring the Mixing of Contextual Information in the Transformer

- Decoding a Neural Retriever's Latent Space for Query Suggestion

- Towards Teachable Reasoning Systems: Using a Dynamic Memory of User Feedback for Continual System Improvement

- Are All Spurious Features in Natural Language Alike? An Analysis through a Causal Lens

- Human Guided Exploitation of Interpretable Attention Patterns in Summarization and Topic Segmentation

10. Language Modeling and Analysis of Language Models

- Iteratively Prompt Pre-trained Language Models for Chain of Thought

- XPrompt: Exploring the Extreme of Prompt Tuning

- Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?

- Finding Skill Neurons in Pre-trained Transformer-based Language Models

- Instance Regularization for Discriminative Language Model Pre-training

- ZeroGen: Efficient Zero-shot Learning via Dataset Generation

- Efficient Large Scale Language Modeling with Mixtures of Experts

- Model Criticism for Long-Form Text Generation

- The Geometry of Multilingual Language Model Representations

- What Makes Instruction Learning Hard? An Investigation and a New Challenge in a Synthetic Environment

- Language Model Pre-Training with Sparse Latent Typing

- Ground-Truth Labels Matter: A Deeper Look into Input-Label Demonstrations

- ROSE: Robust Selective Fine-tuning for Pre-trained Language Models

- Revisiting Pre-trained Language Models and their Evaluation for Arabic Natural Language Processing

- Knowledge Prompting in Pre-trained Language Model for Natural Language Understanding

- Nearest Neighbor Zero-Shot Inference

- Red Teaming Language Models with Language Models

- COPEN: Probing Conceptual Knowledge in Pre-trained Language Models

- Training Language Models with Memory Augmentation

- Invariant Language Modeling

- AdaMix: Mixture-of-Adaptations for Parameter-efficient Model Tuning

- BioReader: a Retrieval-Enhanced Text-to-Text Transformer for Biomedical Literature

- InforMask: Unsupervised Informative Masking for Language Model Pretraining

- Fine-tuned Language Models are Continual Learners

- TemporalWiki: A Lifelong Benchmark for Training and Evaluating Ever-Evolving Language Models

- Improving Temporal Generalization of Pre-trained Language Models with Lexical Semantic Change

- ATTEMPT: Parameter-Efficient Multi-task Tuning via Attentional Mixtures of Soft Prompts

- Exploring Mode Connectivity for Pre-trained Language Models

- Boosting Natural Language Generation from Instructions with Meta-Learning

- Zero-Shot Learners for Natural Language Understanding via a Unified Multiple Choice Perspective

- Subword Evenness (SuE) as a Predictor of Cross-lingual Transfer to Low-resource Languages

- Parameter-Efficient Tuning Makes a Good Classification Head

- Character-level White-Box Adversarial Attacks against Transformers via Attachable Subwords Substitution

- SocioProbe: What, When, and Where Language Models Learn about Sociodemographics

- GPS: Genetic Prompt Search for Efficient Few-Shot Learning

- Active Example Selection for In-Context Learning

- Adapting a Language Model While Preserving its General Knowledge

11. Linguistic Theories, Cognitive Modeling and Psycholinguistics

- The Curious Case of Control

- Tracing Semantic Variation in Slang

- A Comprehensive Comparison of Neural Networks as Cognitive Models of Inflection

- Cascading Biases: Investigating the Effect of Heuristic Annotation Strategies on Data and Models

- Is the Brain Mechanism for Hierarchical Structure Building Universal Across Languages? An fMRI Study of Chinese and English

- Entropy- and Distance-Based Predictors From GPT-2 Attention Patterns Predict Reading Times Over and Above GPT-2 Surprisal

- Discourse Context Predictability Effects in Hindi Word Order

- Context Limitations Make Neural Language Models More Human-Like

- The better your Syntax, the better your Semantics? Probing Pretrained Language Models for the English Comparative Correlative

12. Machine Learning for NLP

- Diverse Parallel Data Synthesis for Cross-Database Adaptation of Text-to-SQL Parsers

- Fixing Model Bugs with Natural Language Patches

- Interventional Training for Out-Of-Distribution Natural Language Understanding

- Backdoor Attacks in Federated Learning by Rare Embeddings and Gradient Ensembling

- When Can Transformers Ground and Compose: Insights from Compositional Generalization Benchmarks

- GammaE: Gamma Embeddings for Logical Queries on Knowledge Graphs

- Inducer-tuning: Connecting Prefix-tuning and Adapter-tuning

- Numerical Optimizations for Weighted Low-rank Estimation on Language Models

- Efficient Nearest Neighbor Search for Cross-Encoder Models using Matrix Factorization

- A Localized Geometric Method to Match Knowledge in Low-dimensional Hyperbolic Space

- Making Pretrained Language Models Good Long-tailed Learners

- RLPrompt: Optimizing Discrete Text Prompts with Reinforcement Learning

- Natural Language to Code Translation with Execution

- HPT: Hierarchy-aware Prompt Tuning for Hierarchical Text Classification

- GA-SAM: Gradient-Strength based Adaptive Sharpness-Aware Minimization for Improved Generalization

- BBTv2: Towards a Gradient-Free Future with Large Language Models

- Passage-Mask: A Learnable Regularization Strategy for Retriever-Reader Models

- Mixture of Attention Heads: Selecting Attention Heads Per Token

- Complex Hyperbolic Knowledge Graph Embeddings with Fast Fourier Transform

- Transformer-based Entity Typing in Knowledge Graphs

- A Survey of Active Learning for Natural Language Processing

- G-MAP: General Memory-Augmented Pre-trained Language Model for Domain Tasks

- Textual Manifold-based Defense Against Natural Language Adversarial Examples

- The Devil in Linear Transformer

- STGN: an Implicit Regularization Method for Learning with Noisy Labels in Natural Language Processing

- Learning Inter-Entity-Interaction for Few-Shot Knowledge Graph Completion

- Hierarchical Phrase-Based Sequence-to-Sequence Learning

- Adaptive Label Smoothing with Self-Knowledge in Natural Language Generation

- Variational Autoencoder with Disentanglement Priors for Low-Resource Task-Specific Natural Language Generation

- MM-Align: Learning Optimal Transport-based Alignment Dynamics for Fast and Accurate Inference on Missing Modality Sequences

13. Machine Translation

- Sampling-Based Approximations to Minimum Bayes Risk Decoding for Neural Machine Translation

- PreQuEL: Quality Estimation of Machine Translation Outputs in Advance

- Digging Errors in NMT: Evaluating and Understanding Model Errors from Partial Hypothesis Space

- The Importance of Being Parameters: An Intra-Distillation Method for Serious Gains

- Non-Parametric Domain Adaptation for End-to-End Speech Translation

- Information-Transport-based Policy for Simultaneous Translation

- Multilingual Machine Translation with Hyper-Adapters

- Continual Learning of Neural Machine Translation within Low Forgetting Risk Regions

- Distill The Image to Nowhere: Inversion Knowledge Distillation for Multimodal Machine Translation

- Machine Translation Robustness to Natural Asemantic Variation

- Neural Machine Translation with Contrastive Translation Memories

- A Template-based Method for Constrained Neural Machine Translation

- Chunk-based Nearest Neighbor Machine Translation

- MT-GenEval: A Counterfactual and Contextual Dataset for Evaluating Gender Accuracy in Machine Translation

- Candidate Soups: Fusing Candidate Results Improves Translation Quality for Non-Autoregressive Translation

- Competency-Aware Neural Machine Translation: Can Machine Translation Know its Own Translation Quality?

- Multi-Granularity Optimization for Non-Autoregressive Translation

- WeTS: A Benchmark for Translation Suggestion

- Norm-based Noisy Corpora Filtering and Refurbishing in Neural Machine Translation

- Towards Robust k-Nearest-Neighbor Machine Translation

- SimQA: Detecting Simultaneous MT Errors through Word-by-Word Question Answering

- Modeling Consistency Preference via Lexical Chains for Document-level Neural Machine Translation

- Breaking the Representation Bottleneck of Chinese Characters: Neural Machine Translation with Stroke Sequence Modeling

- Increasing Visual Awareness in Multimodal Neural Machine Translation from an Information Theoretic Perspective

- Unifying the Convergences in Multilingual Neural Machine Translation

- XLM-D: Decorate Cross-lingual Pre-training Model as Non-Autoregressive Neural Machine Translation

- Hypoformer: Hybrid Decomposition Transformer for Edge-friendly Neural Machine Translation

- When does Parameter-Efficient Transfer Learning Work for Machine Translation?

- Bilingual Synchronization: Restoring Translational Relationships with Editing Operations

- ConsistTL: Modeling Consistency in Transfer Learning for Low-Resource Neural Machine Translation

- Multimodal Robustness for Neural Machine Translation

- Disentangling Uncertainty in Machine Translation Evaluation

- Towards Opening the Black Box of Neural Machine Translation: Source and Target Interpretations of the Transformer

- RAPO: An Adaptive Ranking Paradigm for Bilingual Lexicon Induction

- Adaptive Token-level Cross-lingual Feature Mixing for Multilingual Neural Machine Translation

- Low-resource Neural Machine Translation with Cross-modal Alignment

- Entropy-Based Vocabulary Substitution for Incremental Learning in Multilingual Neural Machine Translation

14. Multilinguality

- Calibrating Zero-shot Cross-lingual (Un-)structured Predictions

- Zero-shot Cross-lingual Transfer of Prompt-based Tuning with a Unified Multilingual Prompt

- Transforming Sequence Tagging Into A Seq2Seq Task

- Joint Completion and Alignment of Multilingual Knowledge Graphs

- Improving Low-Resource Languages in Pre-Trained Multilingual Language Models

- Crossmodal-3600: A Massively Multilingual Multimodal Evaluation Dataset

- Graph-Based Multilingual Label Propagation for Low-Resource Part-of-Speech Tagging

- AfroLID: A Neural Language Identification Tool for African Languages

- The (Undesired) Attenuation of Human Biases by Multilinguality

- CoCoA: An Encoder-Decoder Model for Controllable Code-switched Generation

- Multi-level Distillation of Semantic Knowledge for Pre-training Multilingual Language Model

- Empowering Dual-Encoder with Query Generator for Cross-Lingual Dense Retrieval

- Analyzing the Mono- and Cross-Lingual Pretraining Dynamics of Multilingual Language Models

- Cross-Align: Modeling Deep Cross-lingual Interactions for Word Alignment

- Discovering Low-rank Subspaces for Language-agnostic Multilingual Representations

- Data-Efficient Strategies for Expanding Hate Speech Detection into Under-Resourced Languages

- T-Modules: Translation Modules for Zero-Shot Cross-Modal Machine Translation

- Enhancing Multilingual Language Model with Massive Multilingual Knowledge Triples

- Discovering Language-neutral Sub-networks in Multilingual Language Models

- Hyper-X: A Unified Hypernetwork for Multi-Task Multilingual Transfer

- ConNER: Consistency Training for Cross-lingual Named Entity Recognition

- Few-shot Learning with Multilingual Generative Language Models

- English Contrastive Learning Can Learn Universal Cross-lingual Sentence Embeddings

- Label-aware Multi-level Contrastive Learning for Cross-lingual Spoken Language Understanding

- Polyglot Prompt: Multilingual Multitask Prompt Training

- PRO-CS : An Instance-Based Prompt Composition Technique for Code-Switched Tasks

- Don't Stop Fine-Tuning: On Training Regimes for Few-Shot Cross-Lingual Transfer with Multilingual Language Models

15. Natural Language Generation

- RankGen: Improving Text Generation with Large Ranking Models

- Linearizing Transformer with Key-Value Memory

- A Unified Encoder-Decoder Framework with Entity Memory

- A Distributional Lens for Multi-Aspect Controllable Text Generation

- ELMER: A Non-Autoregressive Pre-trained Language Model for Efficient and Effective Text Generation

- Curriculum Prompt Learning with Self-Training for Abstractive Dialogue Summarization

- Visual Spatial Description: Controlled Spatial-Oriented Image-to-Text Generation

- SubeventWriter: Iterative Sub-event Sequence Generation with Coherence Controller

- Towards a Unified Multi-Dimensional Evaluator for Text Generation

- Prompt-and-Rerank: A Method for Zero-Shot and Few-Shot Arbitrary Textual Style Transfer with Small Language Models

- Gradient-based Constrained Sampling from Language Models

- DisCup: Discriminator Cooperative Unlikelihood Prompt-tuning for Controllable Text Generation

- CapOnImage: Context-driven Dense-Captioning on Image

- DSM: Question Generation over Knowledge Base via Modeling Diverse Subgraphs with Meta-learner

- Re3: Generating Longer Stories With Recursive Reprompting and Revision

- Discourse-Aware Soft Prompting for Text Generation

- Context-Situated Pun Generation

- Differentially Private Language Models for Secure Data Sharing

- Conditional set generation using Seq2seq models

- Twist Decoding: Diverse Generators Guide Each Other

- Contrastive Learning enhanced Author-Style Headline Generation

- IndicNLG Benchmark: Multilingual Datasets for Diverse NLG Tasks in Indic Languages

- Investigating the Robustness of Natural Language Generation from Logical Forms via Counterfactual Samples

- PLOG: Table-to-Logic Pretraining for Logical Table-to-Text Generation

- Controlled Text Reduction

- Help me write a Poem - Instruction Tuning as a Vehicle for Collaborative Poetry Writing

- Revisiting Grammatical Error Correction Evaluation and Beyond

- R2D2: Robust Data-to-Text with Replacement Detection

- Precisely the Point: Adversarial Augmentations for Faithful and Informative Text Generation

- Composing Ci with Reinforced Non-autoregressive Text Generation

- Keyphrase Generation via Soft and Hard Semantic Corrections

- JANUS: Joint Autoregressive and Non-autoregressive Training with Auxiliary Loss for Sequence Generation

- Towards Table-to-Text Generation with Pretrained Language Model: A Table Structure Understanding and Text Deliberating Approach

- T-STAR: Truthful Style Transfer using AMR Graph as Intermediate Representation

- Towards Inter-character Relationship-driven Story Generation

- Hard Gate Knowledge Distillation - Leverage Calibration for Robust and Reliable Language Model

- VisToT: Vision-Augmented Table-to-Text Generation

- Improving Iterative Text Revision by Learning Where to Edit from Other Revision Tasks

- Evade the Trap of Mediocrity: Promoting Diversity and Novelty in Text Generation via Concentrating Attention

- ProofInfer: Generating Proof via Iterative Hierarchical Inference

16. NLP Applications

- Varifocal Question Generation for Fact-checking

- ConReader: Exploring Implicit Relations in Contracts for Contract Clause Extraction

- SHARE: a System for Hierarchical Assistive Recipe Editing

- ScienceWorld: Is your Agent Smarter than a 5th Grader?

- Federated Meta-Learning for Emotion and Sentiment Aware Multi-modal Complaint Identification

- Data-Efficient Playlist Captioning With Musical and Linguistic Knowledge

- Improved grammatical error correction by ranking elementary edits

- Neighborhood Contrastive Learning for Scientific Document Representations with Citation Embeddings

- MedJEx: A Medical Jargon Extraction Model with Wiki's Hyperlink Span and Contextualized Masked Language Model Score

- PAR: Political Actor Representation Learning with Social Context and Expert Knowledge

- Learning to Generate Question by Asking Question: A Primal-Dual Approach with Uncommon Word Generation

- Translation between Molecules and Natural Language

- Guiding Neural Entity Alignment with Compatibility

- Segmenting Numerical Substitution Ciphers

- How Large Language Models are Transforming Machine-Paraphrase Plagiarism

- Deconfounding Legal Judgment Prediction for European Court of Human Rights Cases Towards Better Alignment with Experts

- PLM-based World Models for Text-based Games

- Large language models are few-shot clinical information extractors

- ToKen: Task Decomposition and Knowledge Infusion for Few-Shot Hate Speech Detection

- PromptEHR: Conditional Electronic Healthcare Records Generation with Prompt Learning

- Open-Topic False Information Detection on Social Networks with Contrastive Adversarial Learning

- Rethinking Positional Encoding in Tree Transformer for Code Representation

- A Joint Learning Framework for Restaurant Survival Prediction and Explanation

- Life is a Circus and We are the Clowns: Automatically Finding Analogies between Situations and Processes

- Distilling Causal Effect from Miscellaneous Other-Class for Continual Named Entity Recognition

- Chapter Ordering in Novels

- Open-ended Knowledge Tracing for Computer Science Education

- MedCLIP: Contrastive Learning from Unpaired Medical Images and Text

- Automatic Generation of Socratic Subquestions for Teaching Math Word Problems

- Improving Chinese Spelling Check by Character Pronunciation Prediction: The Effects of Adaptivity and Granularity

- A Speaker-Aware Co-Attention Framework for Medical Dialogue Information Extraction

- Towards Multi-Modal Sarcasm Detection via Hierarchical Congruity Modeling with Knowledge Enhancement

- MetaFill: Text Infilling for Meta-Path Generation on Heterogeneous Information Networks

- Affective Knowledge Enhanced Multiple-Graph Fusion Networks for Aspect-based Sentiment Analysis

- TeleMelody: Lyric-to-Melody Generation with a Template-Based Two-Stage Method

- SEEN: Structured Event Enhancement Network for Explainable Need Detection of Information Recall Assistance

- Tiny-NewsRec: Effective and Efficient PLM-based News Recommendation

- BERT in Plutarch's Shadows

- Natural Logic-guided Autoregressive Multi-hop Document Retrieval for Fact Verification

- Federated Model Decomposition with Private Vocabulary for Text Classification

- Boundary-Driven Table-Filling for Aspect Sentiment Triplet Extraction

- Topical Segmentation of Spoken Narratives: A Test Case on Holocaust Survivor Testimonies

- Factual Accuracy is not Enough: Planning Consistent Description Order for Radiology Report Generation

- GREENER: Graph Neural Networks for News Media Profiling

- Cross-lingual neural fuzzy matching for exploiting target-language monolingual corpora in computer-aided translation

- FormLM: Recommending Creation Ideas for Online Forms by Modelling Semantic and Structural Information

- Multitask Instruction-based Prompting for Fallacy Recognition

- Semantic Novelty Detection and Characterization in Factual Text Involving Named Entities

- Unsupervised Non-transferable Text Classification

- Mask the Correct Tokens: An Embarrassingly Simple Approach for Error Correction

- MoSE: Modality Split and Ensemble for Multimodal Knowledge Graph Completion

17. Phonology, Morphology and Word Segmentation

- Improving Tokenisation by Alternative Treatment of Spaces

- Unsupervised Boundary-Aware Language Model Pretraining for Chinese Sequence Labeling

- Break it Down into BTS: Basic, Tiniest Subword Units for Korean

18. Question Answering

- Pre-training Language Models with Deterministic Factual Knowledge

- OpenCQA: Open-ended Question Answering with Charts

- Generating Natural Language Proofs with Verifier-Guided Search

- Improving Complex Knowledge Base Question Answering via Question-to-Action and Question-to-Question Alignment

- Successive Prompting for Decomposing Complex Questions

- M3: A Multi-View Fusion and Multi-Decoding Network for Multi-Document Reading Comprehension

- Semantic Framework based Query Generation for Temporal Question Answering over Knowledge Graphs

- Improving compositional generalization for multi-step quantitative reasoning in question answering

- Learning to Decompose: Hypothetical Question Decomposition Based on Comparable Texts

- TaCube: Pre-computing Data Cubes for Answering Numerical-Reasoning Questions over Tabular Data

- Rich Knowledge Sources Bring Complex Knowledge Conflicts: Recalibrating Models to Reflect Conflicting Evidence

- QA Domain Adaptation using Hidden Space Augmentation and Self-Supervised Contrastive Adaptation

- Retrieval as Attention: End-to-end Learning of Retrieval and Reading within a Single Transformer

- Generating Information-Seeking Conversations from Unlabeled Documents

- You Only Need One Model for Open-domain Question Answering

- KECP: Knowledge Enhanced Contrastive Prompting for Few-shot Extractive Question Answering

- Improving Passage Retrieval with Zero-Shot Question Generation

- FiE: Building a Global Probability Space by Leveraging Early Fusion in Encoder for Open-Domain Question Answering

- Capturing Global Structural Information in Long Document Question Answering with Compressive Graph Selector Network

- DRLK: Dynamic Hierarchical Reasoning with Language Model and Knowledge Graph for Question Answering

- ConvFinQA: Exploring the Chain of Numerical Reasoning in Conversational Finance Question Answering

- Video Question Answering: Datasets, Algorithms and Challenges

- Teaching Broad Reasoning Skills for Multi-Step QA by Generating Hard Contexts

- PACIFIC: Towards Proactive Conversational Question Answering over Tabular and Textual Data in Finance

- RLET: A Reinforcement Learning Based Approach for Explainable QA with Entailment Trees

- monoQA: Multi-Task Learning of Reranking and Answer Extraction for Open-Retrieval Conversational Question Answering

- UniRPG: Unified Discrete Reasoning over Table and Text as Program Generation

- DuQM: A Chinese Dataset of Linguistically Perturbed Natural Questions for Evaluating the Robustness of Question Matching Models

- TIARA: Multi-grained Retrieval for Robust Question Answering over Large Knowledge Base

- Structure-Unified M-Tree Coding Solver for Math Word Problem

- Rethinking Multi-Modal Alignment in Multi-Choice VideoQA from Feature and Sample Perspectives

- ASQA: Factoid Questions Meet Long-Form Answers

- Uni-Parser: Unified Semantic Parser for Question Answering on Knowledge Base and Database

- ReasTAP: Injecting Table Reasoning Skills During Pre-training via Synthetic Reasoning Examples

- Analogical Math Word Problems Solving with Enhanced Problem-Solution Association

- Knowledge Transfer from Answer Ranking to Answer Generation

- Empowering Language Models with Knowledge Graph Reasoning for Open-Domain Question Answering

19. Resources and Evaluation

- On the Limitations of Reference-Free Evaluations of Generated Text

- GuoFeng: A Benchmark for Zero Pronoun Recovery and Translation

- GENIE: Toward Reproducible and Standardized Human Evaluation for Text Generation

- Three Real-World Datasets and Neural Computational Models for Classification Tasks in Patent Landscaping

- Agent-Specific Deontic Modality Detection in Legal Language

- SCROLLS: Standardized CompaRison Over Long Language Sequences

- JDDC 2.1: A Multimodal Chinese Dialogue Dataset with Joint Tasks of Query Rewriting, Response Generation, Discourse Parsing, and Summarization

- Multi-VQG: Generating Engaging Questions for Multiple Images

- Tomayto, Tomahto. Beyond Token-level Answer Equivalence for Question Answering Evaluation

- QRelScore: Better Evaluating Generated Questions with Deeper Understanding of Context-aware Relevance

- Generative Language Models for Paragraph-Level Question Generation

- Cross-document Event Coreference Search: Task, Dataset and Modeling

- M2D2: A Massively Multi-Domain Language Modeling Dataset

- StoryER: Automatic Story Evaluation via Ranking, Rating and Reasoning

- Linguistic Corpus Annotation for Automatic Text Simplification Evaluation

- Near-Negative Distinction: Giving a Second Life to Human Evaluation Datasets

- Stanceosaurus: Classifying Stance Towards Multicultural Misinformation

- When FLUE Meets FLANG: Benchmarks and Large Pretrained Language Model for Financial Domain

- Reproducibility in Computational Linguistics: Is Source Code Enough?

- MUSIED: A Benchmark for Event Detection from Multi-Source Heterogeneous Informal Texts

- Reproducibility Issues for BERT-based Evaluation Metrics

- On the Evaluation Metrics for Paraphrase Generation

- A Second Wave of UD Hebrew Treebanking and Cross-Domain Parsing

- MasakhaNER 2.0: Africa-centric Transfer Learning for Named Entity Recognition

- ExPUNations: Augmenting Puns with Keywords and Explanations

- Context Matters for Image Descriptions for Accessibility: Challenges for Referenceless Evaluation Metrics

- MetaLogic: Logical Reasoning Explanations with Fine-Grained Structure

- Super-NaturalInstructions: Generalization via Declarative Instructions on 1600+ NLP Tasks

- RuCoLA: Russian Corpus of Linguistic Acceptability

- Towards Knowledge-Intensive Text-to-SQL Semantic Parsing with Formulaic Knowledge

- PHEE: A Dataset for Pharmacovigilance Event Extraction from Text

- LILA: A Unified Benchmark for Mathematical Reasoning

- Transfer Learning with Synthetic Corpora for Spatial Role Labeling and Reasoning

- CEFR-Based Sentence Difficulty Annotation and Assessment

- Title2Event: Benchmarking Open Event Extraction with a Large-scale Chinese Title Dataset

- IDK-MRC: Unanswerable Questions for Indonesian Machine Reading Comprehension

- FigMemes: A Dataset for Figurative Language Identification in Politically-Opinionated Memes

- ParaTag: A Dataset of Paraphrase Tagging for Fine-Grained Labels, NLG Evaluation, and Data Augmentation

- Open-domain Video Commentary Generation

- EUR-Lex-Sum: A Multi- and Cross-lingual Dataset for Long-form Summarization in the Legal Domain

- DivEMT: Neural Machine Translation Post-Editing Effort Across Typologically Diverse Languages

- Human-Machine Collaboration Approaches to Build a Dialogue Dataset for Hate Speech Countering

- Revisiting DocRED - Addressing the False Negative Problem in Relation Extraction

- Bloom Library: Multimodal Datasets in 300+ Languages for a Variety of Downstream Tasks

- Hierarchical Multi-Label Classification of Scientific Documents

- Detecting Label Errors by Using Pre-Trained Language Models

- CN-AutoMIC: Distilling Chinese Commonsense Knowledge from Pretrained Language Models

- Improving Large-scale Paraphrase Acquisition and Generation

- A Survey of Computational Framing Analysis Approaches

- arXivEdits: Understanding the Human Revision Process in Scientific Writing

- DEMETR: Diagnosing Evaluation Metrics for Translation

- RobustLR: A Diagnostic Benchmark for Evaluating Logical Robustness of Deductive Reasoners

- CRIPP-VQA: Counterfactual Reasoning about Implicit Physical Properties via Video Question Answering

- Exploring Document-Level Literary Machine Translation with Parallel Paragraphs from World Literature

- A Dataset for Hyper-Relational Extraction and a Cube-Filling Approach

- A Fine-grained Chinese Software Privacy Policy Dataset for Sequence Labeling and Regulation Compliant Identification

- DiscoSense: Commonsense Reasoning with Discourse Connectives

- Evaluating the Knowledge Dependency of Questions

- Making Science Simple: Corpora for the Lay Summarisation of Scientific Literature

- KOLD: Korean Offensive Language Dataset

- ECTSum: A New Benchmark Dataset For Bullet Point Summarization of Long Earnings Call Transcripts

20. Semantics: Lexical, Sentence level, Textual Inference and Other areas

- Measuring Context-Word Biases in Lexical Semantic Datasets

- Unobserved Local Structures Make Compositional Generalization Hard

- Mitigating Spurious Correlation in Natural Language Understanding with Counterfactual Inference

- Mutual Exclusivity Training and Primitive Augmentation to Induce Compositionality

- PCL: Peer-Contrastive Learning with Diverse Augmentations for Unsupervised Sentence Embeddings

- UnifiedSKG: Unifying and Multi-Tasking Structured Knowledge Grounding with Text-to-Text Language Models

- Reasoning Like Program Executors

- DocInfer: Document-level Natural Language Inference using Optimal Evidence Selection

- Infinite SCAN: An Infinite Model of Diachronic Semantic Change

- R2F: A General Retrieval, Reading and Fusion Framework for Document-level Natural Language Inference

- RASAT: Integrating Relational Structures into Pretrained Seq2Seq Model for Text-to-SQL

- Sentence Representation Learning with Generative Objective rather than Contrastive Objective

- Generating Literal and Implied Subquestions to Fact-check Complex Claims

- Exploring the Secrets Behind the Learning Difficulty of Meaning Representations for Semantic Parsing

- Understanding Jargon: Combining Extraction and Generation for Definition Modeling

- Exploiting Global and Local Hierarchies for Hierarchical Text Classification

- Semantic-aware Contrastive Learning for More Accurate Semantic Parsing

- Kernel-Whitening: Overcome Dataset Bias with Isotropic Sentence Embedding

- Inductive Relation Prediction with Logical Reasoning Using Contrastive Representations

- Open World Classification with Adaptive Negative Samples

- Neural-Symbolic Inference for Robust Autoregressive Graph Parsing via Compositional Uncertainty Quantification

- Learning Semantic Textual Similarity via Topic-informed Discrete Latent Variables

- Leveraging Affirmative Interpretations from Negation Improves Natural Language Understanding

- GraphQ IR: Unifying the Semantic Parsing of Graph Query Languages with One Intermediate Representation

- Retrofitting Multilingual Sentence Embeddings with Abstract Meaning Representation

- DEER: Descriptive Knowledge Graph for Explaining Entity Relationships

- FLUTE: Figurative Language Understanding through Textual Explanations

- QASem Parsing: Text-to-text Modeling of QA-based Semantics

- Generate, Discriminate and Contrast: A Semi-Supervised Sentence Representation Learning Framework

- Natural Language Deduction with Incomplete Information

- PromptBERT: Improving BERT Sentence Embeddings with Prompts

- Are representations built from the ground up? An empirical examination of local composition in language models

- Evaluating the Impact of Model Scale for Compositional Generalization in Semantic Parsing

- Breakpoint Transformers for Modeling and Tracking Intermediate Beliefs

- Looking at the Overlooked: An Analysis on the Word-Overlap Bias in Natural Language Inference

- Cross-domain Generalization for AMR Parsing

21. Sentiment Analysis, Stylistic Analysis, and Argument Mining

- Semantic Simplification for Sentiment Classification

- Curriculum Knowledge Distillation for Emoji-supervised Cross-lingual Sentiment Analysis

- A Multifaceted Framework to Evaluate Evasion, Content Preservation, and Misattribution in Authorship Obfuscation Techniques

- Mitigating Inconsistencies in Multimodal Sentiment Analysis under Uncertain Missing Modalities

- Curriculum Learning Meets Weakly Supervised Multimodal Correlation Learning

- COM-MRC: A COntext-Masked Machine Reading Comprehension Framework for Aspect Sentiment Triplet Extraction

- CEM: Machine-Human Chatting Handoff via Causal-Enhance Module

- Face-Sensitive Image-to-Emotional-Text Cross-modal Translation for Multimodal Aspect-based Sentiment Analysis

- A Span-level Bidirectional Network for Aspect Sentiment Triplet Extraction

- Efficient Nearest Neighbor Emotion Classification with BERT-whitening

- Sentiment-Aware Word and Sentence Level Pre-training for Sentiment Analysis

- AEG: Argumentative Essay Generation via A Dual-Decoder Model with Content Planning

- Pair-Based Joint Encoding with Relational Graph Convolutional Networks for Emotion-Cause Pair Extraction

- AX-MABSA: A Framework for Extremely Weakly Supervised Multi-label Aspect Based Sentiment Analysis

- Generative Data Augmentation with Contrastive Learning for Zero-Shot Stance Detection

- Text Style Transferring via Adversarial Masking and Styled Filling

- UniMSE: Towards Unified Multimodal Sentiment Analysis and Emotion Recognition

- Improving Aspect Sentiment Quad Prediction via Template-Order Data Augmentation

- Generative Entity-to-Entity Stance Detection with Knowledge Graph Augmentation

- Symptom Identification for Interpretable Detection of Multiple Mental Disorders on Social Media

- A Simple Contrastive Learning Framework for Interactive Argument Pair Identification via Argument-Context Extraction

- Prompt-based Distribution Alignment for Domain Generalization in Text Classification

- A Generative Model for End-to-End Argument Mining with Reconstructed Positional Encoding and Constrained Pointer Mechanism

22. Speech, Vision, Robotics, Multimodal Grounding

- PEVL: Position-enhanced Pre-training and Prompt Tuning for Vision-language Models

- Textless Speech Emotion Conversion using Discrete & Decomposed Representations

- Retrieval Augmented Visual Question Answering with Outside Knowledge

- Normalized Contrastive Learning for Text-Video Retrieval

- Robustness of Fusion-based Multimodal Classifiers to Cross-Modal Content Dilutions

- Abstract Visual Reasoning with Tangram Shapes

- Z-LaVI: Zero-Shot Language Solver Fueled by Visual Imagination

- DANLI: Deliberative Agent for Following Natural Language Instructions

- SpeechUT: Bridging Speech and Text with Hidden-Unit for Encoder-Decoder Based Speech-Text Pre-training

- Can Visual Context Improve Automatic Speech Recognition for an Embodied Agent?

- Why is Winoground Hard? Investigating Failures in Visuolinguistic Compositionality

- LVP-M3: Language-aware Visual Prompt for Multilingual Multimodal Machine Translation

- UniGeo: Unifying Geometry Logical Reasoning via Reformulating Mathematical Expression

- CPL: Counterfactual Prompt Learning for Vision and Language Models

- MGDoc: Pre-training with Multi-granular Hierarchy for Document Image Understanding

- TRIPS: Efficient Vision-and-Language Pre-training with Text-Relevant Image Patch Selection

- RelCLIP: Adapting Language-Image Pretraining for Visual Relationship Detection via Relational Contrastive Learning

- McQueen: a Benchmark for Multimodal Conversational Query Rewrite

- Discrete Cross-Modal Alignment Enables Zero-Shot Speech Translation

- An Anchor-based Relative Position Embedding Method for Cross-Modal Tasks

- GHAN: Graph-Based Hierarchical Aggregation Network for Text-Video Retrieval

- A Span-based Multimodal Variational Autoencoder for Semi-supervised Multimodal Named Entity Recognition

- Open-Domain Sign Language Translation Learned from Online Video

- ULN: Towards Underspecified Vision-and-Language Navigation

- Towards Unifying Reference Expression Generation and Comprehension

- mPLUG: Effective and Efficient Vision-Language Learning by Cross-modal Skip-connections

- Speaker Overlap-aware Neural Diarization for Multi-party Meeting Analysis

- SEMGraph: Incorporating Sentiment Knowledge and Eye Movement into Graph Model for Sentiment Analysis

- Extending Phrase Grounding with Pronouns in Visual Dialogues

- LiteVL: Efficient Video-Language Learning with Enhanced Spatial-Temporal Modeling

- Entity-Focused Dense Passage Retrieval for Outside-Knowledge Visual Question Answering

- Character-centric Story Visualization via Visual Planning and Token Alignment

- Contrastive Learning with Expectation-Maximization for Weakly Supervised Phrase Grounding

- Distilled Dual-Encoder Model for Vision-Language Understanding

- Weakly-Supervised Temporal Article Grounding

- Adaptive Contrastive Learning on Multimodal Transformer for Review Helpfulness Prediction

- FaD-VLP: Fashion Vision-and-Language Pre-training towards Unified Retrieval and Captioning

- End-to-End Unsupervised Vision-and-Language Pre-training with Referring Expression Matching

23. Summarization

- How Far are We from Robust Long Abstractive Summarization?

- CiteSum: Citation Text-guided Scientific Extreme Summarization and Domain Adaptation with Limited Supervision

- Learning to Generate Overlap Summaries through Noisy Synthetic Data

- Toward Unifying Text Segmentation and Long Document Summarization

- SNaC: Coherence Error Detection for Narrative Summarization

- HydraSum: Disentangling Style Features in Text Summarization with Multi-Decoder Models

- SEM-F1: an Automatic Way for Semantic Evaluation of Multi-Narrative Overlap Summaries at Scale

- SQuALITY: Building a Long-Document Summarization Dataset the Hard Way

- Effective and Efficient Query-aware Snippet Extraction for Web Search

- Opinion Summarization by Weak-Supervision from Mix-structured Data

- Few-shot Query-Focused Summarization with Prefix-Merging

- Summarizing Community-based Question-Answer Pairs

- Scientific Paper Extractive Summarization Enhanced by Citation Graphs

- Analyzing and Evaluating Faithfulness in Dialogue Summarization

- Abstractive Summarization Guided by Latent Hierarchical Document Structure

- RACE: Retrieval-augmented Commit Message Generation

- CTRLsum: Towards Generic Controllable Text Summarization

- Leveraging Locality in Abstractive Text Summarization

- Salience Allocation as Guidance for Abstractive Summarization

- Factorizing Content and Budget Decisions in Abstractive Summarization of Long Documents

- Assist Non-native Viewers: Multimodal Cross-Lingual Summarization for How2 Videos

- X-FACTOR: A Cross-metric Evaluation of Factual Correctness in Abstractive Summarization

- ClidSum: A Benchmark Dataset for Cross-Lingual Dialogue Summarization

- Towards Summary Candidates Fusion

- Unsupervised Opinion Summarisation in the Wasserstein Space

- Why Do You Feel This Way? Summarizing Triggers of Emotions in Social Media Posts

- Evaluating and Improving Factuality in Multimodal Abstractive Summarization

- Referee: Reference-Free Sentence Summarization with Sharper Controllability through Symbolic Knowledge Distillation

- Learning with Rejection for Abstractive Text Summarization

- Correcting Diverse Factual Errors in Abstractive Summarization via Post-Editing and Language Model Infilling

- HEGEL: Hypergraph Transformer for Long Document Summarization

24. Syntax, Parsing and their Applications

- SynGEC: Syntax-Enhanced Grammatical Error Correction with a Tailored GEC-Oriented Parser

- Learning a Grammar Inducer from Massive Uncurated Instructional Videos

- Unbiased and Efficient Sampling of Dependency Trees

- Algorithms for Acyclic Weighted Finite-State Automata with Failure Arcs

- On Parsing as Tagging

- Algorithms for Weighted Pushdown Automata

- Reorder and then Parse, Fast and Accurate Discontinuous Constituency Parsing

25. Theme Track

- Towards Climate Awareness in NLP Research

- Whose Language Counts as High Quality? Measuring Language Ideologies in Text Data Selection

- Geographic Citation Gaps in NLP Research

- Neural Theory-of-Mind? On the Limits of Social Intelligence in Large LMs

- Structural generalization is hard for sequence-to-sequence models

- Questioning the Validity of Summarization Datasets and Improving Their Factual Consistency

- Missing Counter-Evidence Renders NLP Fact-Checking Unrealistic for Misinformation

- The Authenticity Gap in Human Evaluation

- Counterfactual Recipe Generation: Exploring Compositional Generalization in a Realistic Scenario

- Bridging Fairness and Environmental Sustainability in Natural Language Processing

- Towards Robust Numerical Question Answering: Diagnosing Numerical Capabilities of NLP Systems

- CONDAQA: A Contrastive Reading Comprehension Dataset for Reasoning about Negation

26. Unsupervised and Weakly-Supervised Methods in NLP

- Bilingual Lexicon Induction for Low-Resource Languages using Graph Matching via Optimal Transport

- CycleKQR: Unsupervised Bidirectional Keyword-Question Rewriting

- Zero-Shot Text Classification with Self-Training

- Fine-grained Category Discovery under Coarse-grained supervision with Hierarchical Weighted Self-contrastive Learning

- Learning Instructions with Unlabeled Data for Zero-Shot Cross-Task Generalization

- Fast-R2D2: A Pretrained Recursive Neural Network based on Pruned CKY for Grammar Induction and Text Representation

- Unsupervised Tokenization Learning

- FastClass: A Time-Efficient Approach to Weakly-Supervised Text Classification

- PASTA: Table-Operations Aware Fact Verification via Sentence-Table Cloze Pre-training

- Rethinking Style Transformer with Energy-based Interpretation: Adversarial Unsupervised Style Transfer using a Pretrained Model

- IsoVec: Controlling the Relative Isomorphism of Word Embedding Spaces

- Modal-specific Pseudo Query Generation for Video Corpus Moment Retrieval

- Beyond prompting: Making Pre-trained Language Models Better Zero-shot Learners by Clustering Representations